Baldur's Gate: Multinomial Edition

clux April 12, 2022 [gaming] #math #linuxIn a brief bout of escapism from the world and responsibilities, I booted up Baldur's Gate 2 with my brother. It's an amazing game, once you have figured out how to roll your character.

For today's installment; rather than telling you about the game, let's talk about the maths behind rolling a 2e character for BG2, and then running simulations with weird X-based linux tools.

Rolling a character

The BG2 character generation mechanics is almost entirely based on the rules from AD&D 2e. You get 6 ability scores, and each ability score is rolled as the sum of 3d6.

Probablistically; this should give you a character with an expected

63total ability points (as a result of rolling18d6).

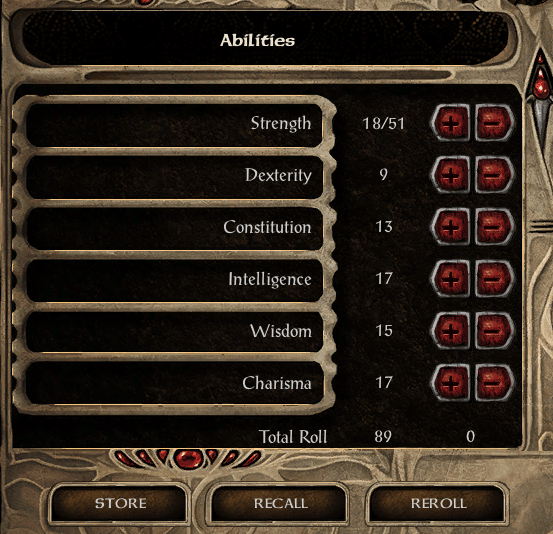

Mechanically, you are in this screen:

...and they have given you a reroll button.

It's a strange design idea to port the rolling mechanics from d&d into this game. In a normal campaign you'd usually get one chance rolling, but here, there's no downside to keeping going; encouraging excessive time investment (the irony in writing a blog post on this is not lost on me). The character creation in BG2 would probably have been less perfection focused if they'd gone for something like 5e point buy.

Anyway, suppose you want learn how to automate this, or you just want to think about combinatorics, multinomials, and weird X tools for a while, then this is the right place. You will also figure out how long it's expected to take to roll high.

HINT: ..it's less time than it took to write this blogpost

Disclaimer

Using the script used herein to achieve higher rolls than you have patience for, is on some level; cheating. That said, it's a fairly pointless effort:

- this is an old, unranked rpg with difficulty settings

- having dump stats is not heavily penalized in the game

- early items nullify effects of common dump stats (19 STR girdle or 18 CHA ring)

- you can get max stats in 20 minutes with by abusing inventory+stat underflow

- some NPCS come with gear that blows marginally better stats out of the water

So assuming you have a reason to be here despite this; let's dive in to some maths.

Multinomials and probabilities

How likely are you to get a 90/95/100?

The sum of rolling 18 six-sided dice follows an easier variant of the multinomial distribution where we have equal event probabilities. We are going to follow the simpler multinomial expansion from mathworld/Dice for s=6 and n=18 and find $P(x, 18, 6)$ which we will denote as $P(X = x)$; the chance of rolling a sum equal to $x$:

$$P(X = x) = \frac{1}{6^{18}} \sum_{k=0}^{\lfloor(x-18)/6\rfloor} (-1)^k \binom{18}{k} \binom{x-6k-1}{17}$$ $$ = \sum_{k=0}^{\lfloor(x-18)/6\rfloor} (-1)^k \frac{18}{k!(18-k)!} \frac{(x-6k-1)!}{(x-6k-18)!}$$

If we were to expand this expression, we would get 15 different expressions depending on how big of an $x$ you want to determine. So rather than trying to reduce this to a polynomial expression over $p$, we will paste values into wolfram alpha and tabulate for $[18, \ldots, 108]$.

You can see the appendix for the numbers. Here we will just plot the values:

and with the precise distribution we can also calculation expectation and variance:

- $E(X) = 63$

- $Var(X) = 52.5 \thickapprox 7.24^2$

This is how things should look on paper. From the chart you can extract:

108would be a once in101 trillion($6^{18}$) event107would be a once in5 trillionevent (6^18/18)106would be a once in600 billionevent (5s in two places, or 4 in one place)105would be a once in90 billionevent (3x5s, or 1x4 and 1x5, or 1x3)104would be a once in16 billionevent (4x5s, 2x5s and 1x4, 2x4s, 1x5 and 1x3, 1x2)103would be a once in4 billionevent (...)102would be a once in1 billionevent101would be a once in290 millionevent100would be a once in94 millionevent99would be a once in32 millionevent- $\ldots$

95would be a once in900kevent (first number with prob < 1 in a million)

But is this really right for BG? A lot of people have all rolled nineties in just a few hundred rolls, and many even getting 100 or more..was that extreme luck, or are higher numbers more likely than what this distribution says?

Well, let's start with the obvious:

The distribution is censored. We don't see the rolls below $75$.

What's left of this cutoff actually accounts for around 94% of the distribution. If the game did not do this, you'd be as likely getting 36 as a 90. We are effectively throwing away "19 bad rolls" on every roll.

AD&D 2ehad its own ways to tilt the distribution in a way that resulted in more powerful characters.

How such a truncation or censoring is performed is at the mercy of the BG engine. We will rectify the distribution by scaling up the truncated version of our distribution, and show that this is correct later.

Here we have divided by the sum of the probabilities of the right hand side of the graph $P(X \ge 75)$ to get the new probability sum to 1.

Using this scaled data, we can get precise, truncated distribution parameters:

- $E(X_T) = 77.525$

- $Var(X_T) = 2.61^2$

This censored 18d6 multinomial distribution is actually very close for certain cases, and we will demonstrate this.

But first, we are going to need to press the reroll button a lot...

Automating Rolling

The simulation script / hacks we made is found here.

Tools

We are playing on Linux with X and some obscure associated tooling:

scrot- X screenshot utilityxdotool- X CLI automation toolxwininfo- X window information utility

Basic strategy;

- find out where buttons are with

xwininfo - press the

rerollbutton withxdotool - take screenshot of the

totalnumber withscrot - compare screenshot to previous rolls

- press

storewhen a new maximum is found

The script also does some extra stuff to determine the strength roll, but that's not relevant here.

Initialization

To standardise what we are taking screenshots of, we need a consistent frame of reference.

xwininfo will give us the x,y coordinates of the top left corner of the game window, and then hard-code the offsets from that because the game has a consistent layout. There is some complexity in doing this, but it has so far worked well.

Caveat: You need to have scaled the window to size >= 1024x768 to avoid UI downscaling.

The standardised approach also helps with dealing with rolls, and it let us populate a roll-table quickly.

Roll Tables

Taking screenshots is pretty easy. Use scrot at an x,y coordinate followed by lenghts; ,width,height as remaining arguments defining the square to screenshot:

the output of this can be piped to a .webpwebpd passed to compare (part of imagemagick package), to compare values based on thresholds. However, this idea is actually overkill..

The menu background is static and the resulting screenshots are actually completely deterministic per value, so we can instead just compare them by their hashes in one big switch (i.e. after piping to md5) and use that as our roll table, Excerpt:

) CURRENT_ROLL=90 ;;

) CURRENT_ROLL=91 ;;

) CURRENT_ROLL=92 ;;

) CURRENT_ROLL=93 ;;

) CURRENT_ROLL=94 ;;

) CURRENT_ROLL=95 ;;

) CURRENT_ROLL=96 ;;

) CURRENT_ROLL=97 ;;

) CURRENT_ROLL=98 ;;

) CURRENT_ROLL=99 ;;

) CURRENT_ROLL=100 ;;

Clicking

Automating a click is simply:

where the --delay=0 overrides a builtin delay between clicks.

The only complication here is that BG performs internal buffering of clicks, so this allows us to blast through numbers faster than the screen can display them. This means we have to compensate with a sleep 0.001 after clicking to ensure we can grab the scrot of the roll before another click is registered.

Showcase

Running the script (with a terminal showing the script output overlayed) looks like this:

On my machine, we get just over 15 rolls per second.

Sampling

We rolled a human fighter, paladin, and a ranger overnight with roughly half a million rolls each (see appendix), and we got these values:

Let's start with the fighter. If we compare the fighter graph with our precise, censored multinomial distribution, they are very close:

So for fighters, we can be pretty happy with the calculations we have done, and can use the precise probabilities as a guide.

How long would it take you to achieve >95 for a fighter using the script?

108is a once in5 trillionevent (10,000 years)107is a once in300 billionevent (600 years)106is a once in33 billionevent (69 years)105is a once in5 billionevent (10 years)104is a once in900 millionevent (2 years)103is a once in200 millionevent (5 months)102is a once in50 millionevent (5 weeks)101is a once in16 millionevent (2 weeks)100is a once in5 millionevent (4 days)99is a once in2 millionevent (1.5 day)98is a once in700kevent (12h)97is a once in270kevent (5h)96is a once in110kevent (2h)95is a once in50kevent (55m)

So if we only look at human fighters or mages, we can stop here:

We are actually quite a lot more likely to get a good roll early than what just the pure dice math would indicate thanks to censoring (50k rolls => likely

95rather than estimated90without censoring).

However, what's up with the paladins and rangers? Time for a more painful math detour.

Class Variance

The reason for the discrepancy is simple: stat floors based on races/class.

These stat floors are insignificant in some cases, but highly significant in others. Some highly floored classes actually push the uncensored mean above the 75 cutoff even though it's a whole 12 points above the mean of the original underlying distribution.

The floors for a some of the classes:

- fighter mins:

STR=9, rest3 - mage mins:

INT=9, rest3 - paladin mins:

CHA=17,WIS=13,STR=12,CON=9, rest3 - ranger mins:

CON=14,WIS=14,STR=13,DEX=13, rest3 - other classes mins: generally light floors

In other words: paladins and rangers have significantly higher rolls on average.

Sidenote: in

2eyou actually rolled stats first, and only if you met the requirements could you become a Paladin / Ranger. It's an interesting choice. Would not call this fun.

Anyway. Is it possible to incorporate these floors into our modelling?

Ability Distributions

If floors are involved at earlier stages, we have to take a step back and look at the distributions that make up the sum. We can compute distributions for individual ability scores (even if floored) if we use the distribution for $P(x, 3, 6)$ from mathworld/Dice where s=6 and n=3 and censor it at a cutoff point similar to how we censor the total distribution.

Computing the value without a floor follows the same setup as when we did 18 dice; use wolfram alpha and tabulate for $[3, \ldots, 18]$:

then we truncate + scale this at the observed floor points 9, 12, 13, 14, and 17:

To avoid having to write out conditionals $P(X = x| X\ge k)$ everywhere we will denote $X^{\lfloor k \rfloor}$ as one of these graphed multinomial distributions for the sum of 3d6 floored at $k$:

$$X^{\lfloor k \rfloor} \sim \mathcal{M}^{\lfloor k \rfloor}(3d6)$$

Note also that an unfloored ability score $X$ is equal to $X^{\lfloor3\rfloor}$.

We can then compute precise conditional expectations by floor:

- $\mathbb{E}(X^{\lfloor3\rfloor}) = \mathbb{E}(X) = 3\sum_{k=1}^{6}\frac{k}{6} = 3*3.5 = 10.5$

- $\mathbb{E}(X^{\lfloor9\rfloor}) = 11.8125$

- $\mathbb{E}(X^{\lfloor12\rfloor}) = 13.5555$

- $\mathbb{E}(X^{\lfloor13\rfloor}) = 14.2500$

- $\mathbb{E}(X^{\lfloor14\rfloor}) = 15.0000$

- $\mathbb{E}(X^{\lfloor17\rfloor}) = 17.2500$

Similarly, we can compute precise variances:

- $Var(X^{\lfloor3\rfloor}) = Var(X) = 3\sum_{k=1}^6\frac{(x_i - 3.5)^2}{6} = 3*2.92 = 8.75$

- $Var(X^{\lfloor9\rfloor}) = 4.5773$

- $Var(X^{\lfloor12\rfloor}) = 2.2469$

- $Var(X^{\lfloor13\rfloor}) = 1.6875$

- $Var(X^{\lfloor14\rfloor}) = 1.2000$

- $Var(X^{\lfloor17\rfloor}) = 0.1875$

Sum of Ability Distributions

To get a distribution for the total score, we need to create a sum distribution of the distributions for their six base stats at their given class floors.

Define $Z_{paladin}$, $Z_{ranger}$ and $Z_{fighter}$ as:

$$Z_{paladin} = X_1^{\lfloor17\rfloor} + X_2^{\lfloor13\rfloor} + X_3^{\lfloor12\rfloor} + X_4^{\lfloor9\rfloor} + X_5^{\lfloor3\rfloor} + X_6^{\lfloor3\rfloor}$$ $$Z_{ranger} = X_1^{\lfloor14\rfloor} + X_2^{\lfloor14\rfloor} + X_3^{\lfloor13\rfloor} + X_4^{\lfloor13\rfloor} + X_5^{\lfloor3\rfloor} + X_6^{\lfloor3\rfloor}$$ $$Z_{fighter} = X_1^{\lfloor9\rfloor} + X_2^{\lfloor3\rfloor} + X_3^{\lfloor3\rfloor} + X_4^{\lfloor3\rfloor} + X_5^{\lfloor3\rfloor} + X_6^{\lfloor3\rfloor}$$

for floored 3d6 based random variables $X_i^{\lfloor N \rfloor} \sim \mathcal{M}^{\lfloor N \rfloor}(3d6)$.

Using the computed expectations above to sum across the 6 main stats:

- $\mathbb{E}(Fighter) = \mathbb{E}(X^{\lfloor9\rfloor}) + 5\mathbb{E}(X) = 64.31$

- $\mathbb{E}(Ranger) = 2\mathbb{E}(X^{\lfloor14\rfloor}) + 2\mathbb{E}(X^{\lfloor13\rfloor}) + 2\mathbb{E}(X) = 79.5$

- $\mathbb{E}(Paladin) = \mathbb{E}(X^{\lfloor17\rfloor}) + \mathbb{E}(X^{\lfloor13\rfloor}) + \mathbb{E}(X^{\lfloor12\rfloor}) + \mathbb{E}(X^{\lfloor9\rfloor})+ 2\mathbb{E}(X) = 77.86$

and similarly for variance:

- $Var(Fighter) = Var(X^{\lfloor9\rfloor}) + 5Var(X) \thickapprox 6.95^2$

- $Var(Ranger) = 2Var(X^{\lfloor14\rfloor}) + 2Var(X^{\lfloor13\rfloor}) + 2Var(X) \thickapprox 4.82^2$

- $Var(Paladin) = Var(X^{\lfloor17\rfloor}) + Var(X^{\lfloor13\rfloor}) + Var(X^{\lfloor12\rfloor}) + Var(X^{\lfloor3\rfloor})+ 2Var(X) \thickapprox 5.12^2$

noting that variables are independent under the observed two stage censoring.

Why can we rely on two stage censoring and their independence? If any of these internal mechanisms used some kind of

ifcondition orminfunction, it would be immediately obvious from the distribution. The paladin distribution of charisma is clearly a~1/4for an18, and~3/4for a17; it would have been much rarer to see an 18 otherwise.

Thus, the distributions of our classes are based on multinomal-based distributions with the following first moments:

- $Uncensored\ Fighter \sim \mathcal{M}(\mu = 64.31, \sigma^2 = 6.95^2)$

- $Uncensored\ Ranger \sim \mathcal{M}(\mu = 79.5, \sigma^2 = 4.82^2)$

- $Uncensored\ Paladin \sim \mathcal{M}(\mu = 77.86, \sigma^2 = 5.12^2)$

This is nice as a quick overview of what's best in the higher ranges, but it's not very precise. Without the PMF for the sum of our ability scores, it's hard to give good values for what the truncated version will look like (we are censoring in two stages). In particular, these heavily floored random variables end up giving us quite asymmetrical distributions in the tails.

Class Distributions

Thankfully, it is possible to inductively compute the pmf of $Z_{class}$ via convolution.

Some internet digging notwithstanding; most answers found online for this required either mathematica functions (that we do not have here in our inlined source), or a slightly more laborious manual convolution. We will follow the inductive convolution approach which we can solve with recursion. Paladin case:

Let $X_{12} = X_1^{\lfloor17\rfloor} + X_2^{\lfloor13\rfloor}$. We can generate values for the pmf $p_{X_{12}}$ for $X_{12}$ via the pmfs $p_{X_i}$ for $X_1^{\lfloor17\rfloor}$ and $X_2^{\lfloor13\rfloor}$ via the convolution formula:

$$P(X_{12} = n) = \ (p_{X_1} * p_{X_2})(n) = \sum_{m=-\infty}^{\infty}P(X_1=m)P(X_2 = n-m)$$

This step is particularly easy for the paladin, because $X_1^{\lfloor17\rfloor}$ only takes two values (i.e. $m=17$ ahd $m=18$ are the only non-zero parts in the sum).

The rest is more laborious to do by hand, as the sums get increasingly large while we iterate towards $Z=P_{123456}$ by repeatedly applying convolution to the remaining $X_i$:

$$P(X_{123} = n) = \ (p_{X_{12}} * p_{X_3})(n) \sum_{m=-\infty}^{\infty}P(X_{12}=m)P(X_3 = n-m)$$

$$P(X_{1234} = n) = \ (p_{X_{123}} * p_{X_4})(n) \sum_{m=-\infty}^{\infty}P(X_{123}=m)P(X_4 = n-m)$$

$$P(X_{12345} = n) = \ (p_{X_{1234}} * p_{X_5})(n) \sum_{m=-\infty}^{\infty}P(X_{1234}=m)P(X_5 = n-m)$$

$$P(X_{123456} = n) = \ (p_{X_{12345}} * p_{X_5})(n) \sum_{m=-\infty}^{\infty}P(X_{12345}=m)P(X_6 = n-m)$$

The hard work is correctly matching indexes in our probability arrays that serve as our mass functions to the sum, and defaulting to zero when accessing out of bounds:

// given pXi = [0.75, 0.25], pXj = [0.375, 0.2678, 0.1786, 0.1071, 0.05357, 0.01786]

// (approximations of the first two truncated paladin probability arrays for CHA + WIS)

;

// returns [0.28125, 0.29464, 0.2009, 0.125, 0.06696, 0.02678, 0.004464]

Using this, we can compute the PMF for $Z_{class} = X_{123456}$:

$$P(Z_{class} = z) = \ (((((p_{X_1} * p_{X_2}) * p_{X_3}) * p_{X_4}) * p_{X_5}) * p_{X_6}) (z)$$

and we graph them for various classes:

Notice the heavily tilted ranger/paladin distributions whose lean is distinctively more to the right.

The last thing that's left now is to rectify $Z_c$ at 75 to get our true, final distributions:

These match the sampled data almost perfectly as can be seen in more detailed comparisons in the appendix.

As can be seen; ranger is faster at getting high numbers, particularly in the 90 -> 97 range, but if you want rolls >= 100, paladin rolls the highest at the fastest rate.

We end with the expected time to roll above a certain threshold where we use the most efficient class based on the number:

108paladin rolls once in100 billion⇒ 210y107paladin rolls once in5 billion⇒ 10y106paladin rolls once in600 million⇒ 1y105paladin rolls once in100 million⇒ 11w104paladin rolls once in19 million⇒ 2w103paladin rolls once in5 million⇒ 4d102paladin rolls once in1.4 million⇒ 1d101paladin rolls once in400k⇒ 7h100paladin/ranger rolls once in150k⇒ 3h99ranger rolls once in57k⇒ 1h98ranger rolls once in23k⇒ 25m97ranger rolls once in10k⇒ 11m96ranger rolls once in5k⇒ 5m95ranger rolls once in2k⇒ 2m

Hope you have enjoyed this random brain dump on probability. Don't think I have ever been nerd sniped this hard before.. I just wanted to play a game and take a break.

/me closes 20 tabs

Appendix

1. Raw simulation data

10 hour paladin roll (555558 rolls in 601m)

75: 50888

76: 54911

77: 57338

78: 57442

79: 55589

80: 52357

81: 47503

82: 41339

83: 34458

84: 28599

85: 21997

86: 16722

87: 12322

88: 8697

89: 5997

90: 3774

91: 2371

92: 1489

93: 822

94: 465

95: 251

96: 129

97: 56

98: 24

99: 12

100: 4

101: 1

102: 1

10 hour fighter roll (555560 rolls in 608m)

75: 137379

76: 109198

77: 85620

78: 65004

79: 48256

80: 35041

81: 24987

82: 17545

83: 11981

84: 7883

85: 5007

86: 3139

87: 1946

88: 1138

89: 670

90: 368

91: 199

92: 103

93: 49

94: 26

95: 12

96: 6

97: 2

98: 1

9 hour ranger roll (500054 rolls in 548m)

75: 32296

76: 37790

77: 43118

78: 46609

79: 48108

80: 47589

81: 45774

82: 41963

83: 36876

84: 30973

85: 25272

86: 19904

87: 14730

88: 10430

89: 7285

90: 4667

91: 2991

92: 1696

93: 986

94: 529

95: 254

96: 121

97: 56

98: 26

99: 10

100: 1

2. Tabulated values for 18 dice multinomial probability distribution

18 : 1/101559956668416

19 : 1/5642219814912

20 : 19/11284439629824

21 : 95/8463329722368

22 : 665/11284439629824

23 : 1463/5642219814912

24 : 33643/33853318889472

25 : 9605/2821109907456

26 : 119833/11284439629824

27 : 1552015/50779978334208

28 : 308465/3761479876608

29 : 97223/470184984576

30 : 2782169/5642219814912

31 : 1051229/940369969152

32 : 4550747/1880739938304

33 : 786505/156728328192

34 : 37624655/3761479876608

35 : 36131483/1880739938304

36 : 1206294965/33853318889472

37 : 20045551/313456656384

38 : 139474379/1253826625536

39 : 1059736685/5642219814912

40 : 128825225/417942208512

41 : 17143871/34828517376

42 : 8640663457/11284439629824

43 : 728073331/626913312768

44 : 2155134523/1253826625536

45 : 3942228889/1586874322944

46 : 4949217565/1410554953728

47 : 3417441745/705277476864

48 : 27703245169/4231664861184

49 : 3052981465/352638738432

50 : 126513483013/11284439629824

51 : 240741263447/16926659444736

52 : 199524184055/11284439629824

53 : 60788736553/2821109907456

54 : 2615090074301/101559956668416

55 : 56759069113/1880739938304

56 : 130521904423/3761479876608

57 : 110438453753/2821109907456

58 : 163027882055/3761479876608

59 : 88576807769/1880739938304

60 : 566880747559/11284439629824

61 : 24732579319/470184984576

62 : 101698030955/1880739938304

63 : 461867856157/8463329722368

64 : 101698030955/1880739938304

65 : 24732579319/470184984576

66 : 566880747559/11284439629824

67 : 88576807769/1880739938304

68 : 163027882055/3761479876608

69 : 110438453753/2821109907456

70 : 130521904423/3761479876608

71 : 56759069113/1880739938304

72 : 2615090074301/101559956668416

73 : 60788736553/2821109907456

74 : 199524184055/11284439629824

75 : 240741263447/16926659444736

76 : 126513483013/11284439629824

77 : 3052981465/352638738432

78 : 27703245169/4231664861184

79 : 3417441745/705277476864

80 : 4949217565/1410554953728

81 : 3942228889/1586874322944

82 : 2155134523/1253826625536

83 : 728073331/626913312768

84 : 8640663457/11284439629824

85 : 17143871/34828517376

86 : 128825225/417942208512

87 : 1059736685/5642219814912

88 : 139474379/1253826625536

89 : 20045551/313456656384

90 : 1206294965/33853318889472

91 : 36131483/1880739938304

92 : 37624655/3761479876608

93 : 786505/156728328192

94 : 4550747/1880739938304

95 : 1051229/940369969152

96 : 2782169/5642219814912

97 : 97223/470184984576

98 : 308465/3761479876608

99 : 1552015/50779978334208

100 : 119833/11284439629824

101 : 9605/2821109907456

102 : 33643/33853318889472

103 : 1463/5642219814912

104 : 665/11284439629824

105 : 95/8463329722368

106 : 19/11284439629824

107 : 1/5642219814912

108 : 1/101559956668416

3. Tabulated values for 3 dice multinomial probability distribution

3: 1/216

4: 1/72

5: 1/36

6: 5/108

7: 5/72

8: 7/72

9: 25/216

10: 1/8

11: 1/8

12: 25/216

13: 7/72

14: 5/72

15: 5/108

16: 1/36

17: 1/72

18: 1/216

4. Comparing observed vs. computed by class

We compare with the precise $Z_{class}$ distributions worked out by convolution above.

For fighter we have included the original uncorrected multinomial distribution for 18 dice before doing simulations. It was sufficiently close to the true distribution because flooring a single ability to 9 amounts to almost nothing in the right tail:

That said, the true distribution taking into account the single floored stat has a much better fit.

5. Normal approximations

An original idea here was to avoid doing all the faff with convolution above, and "just" approximate the distribution with some normal $\mathcal{N}(μ, σ)$.

After all, this is suggested for high n, and it will hold even for an unequal sum of independent random variables with sufficient degrees of freedom.

However, there are many complications with this approach:

- we only sample the doubly censored data, we don't see the full normal distribution

- distributions are heavily shifted (as can be seen with the true paladin distribution)

- estimation of underlying normal distribution relies difficult for classes whose means precedes the truncation point

It does looks like there are tools to work with truncated or rectified normals:

- extension methods for rectified normal distributions

- formulas for dealing with truncation of a normal distributions

- cnorm r library which comes with a giant pdf as documentation to help remind you of why we need rustdoc

But this felt like the wrong path to descend, and the path was littered with arcana:

ah, yes, just a vector. pic.twitter.com/RvkpS4h0DX

— eirik ᐸ'⧖ᐳ (@sszynrae) April 7, 2022

That said. If anyone wants to fill in something here, or link to alternate methods, feel free to PR in something here or write an issue.